Wow.

Maemo news

There have been a few interesting Maemo announcements lately. See the links below or Planet Maemo for a more complete story. The N810 form factor with HSPA, a faster processor and, of course, an open software stack could be an amazing device.

http://jaaksi.blogspot.com/2008/09/osim-news-whats-up-with-maemo.html

http://tabletblog.com/2008/09/maemo-summit-fremantle-and-what-it.html

Sunday morning links

Busyness vs. Burst: Why Corporate Web Workers Look Unproductive: Different ways of working.

Don’t be afraid of change: An interesting description of business model changes at Chesspark.

BearHugCamp For Those Who Missed It: Notes from a recent meeting on Microblogging. Lots of good XMPP and OMB information.

A few east coast pictures

2018: Life on the Net panel

The above is a video of an interesting panel discussion at Fortune Brainstorm. The discussion ranges from governmental threats to the Internet to the value being captured by the carriers on the mobile Internet. Panellists include Lawrence Lessig, professor of law at Stanford Law School; Joichi Ito, CEO of Creative Commons and chairman of Six Apart Japan; and Philip Rosedale, founder and chairman of Linden Lab (Second Life).

More fun with DNS packet captures

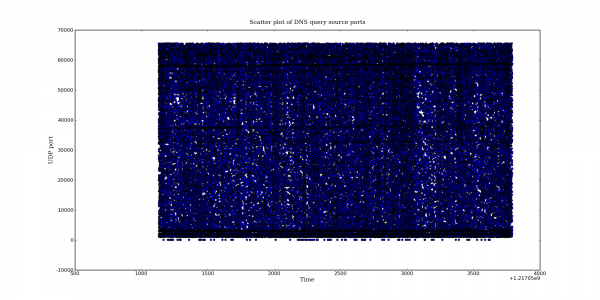

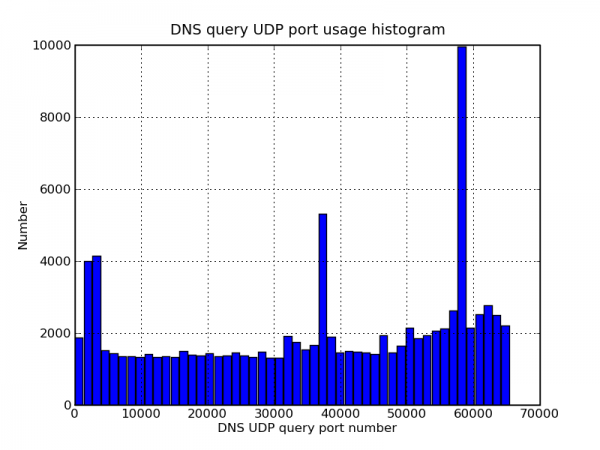

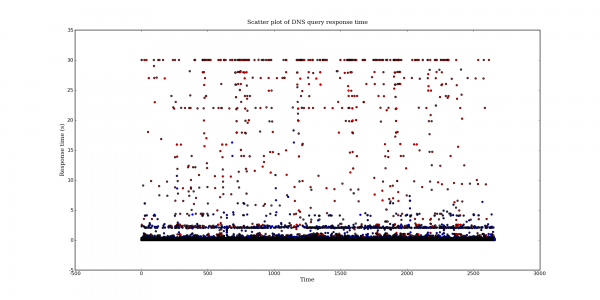

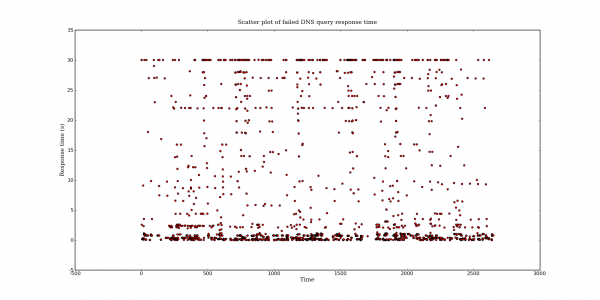

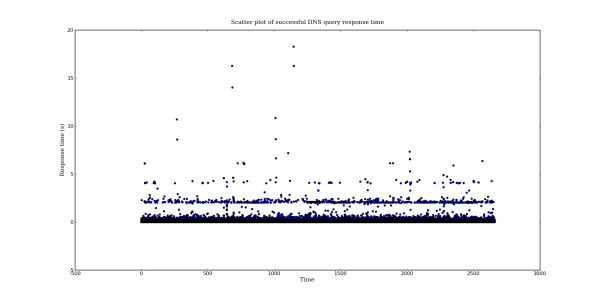

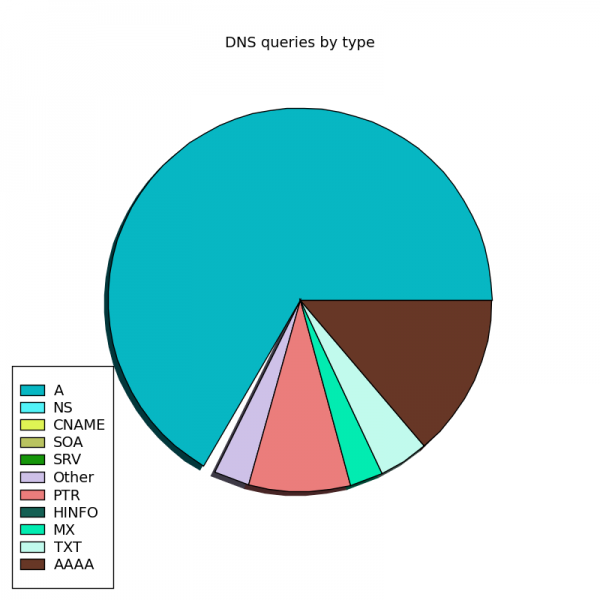

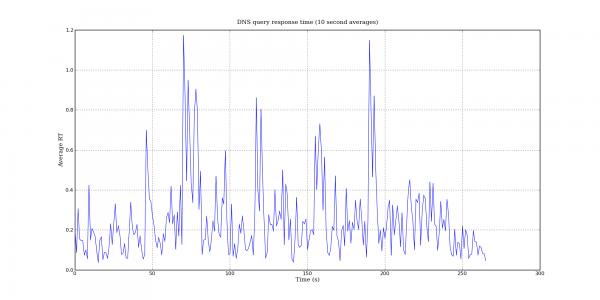

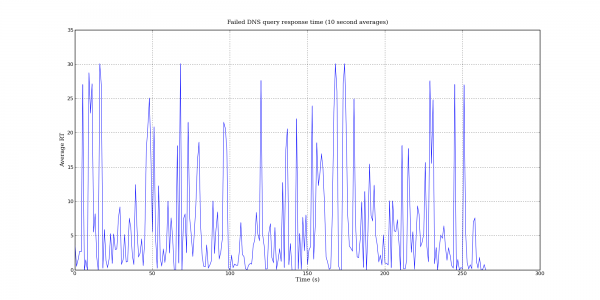

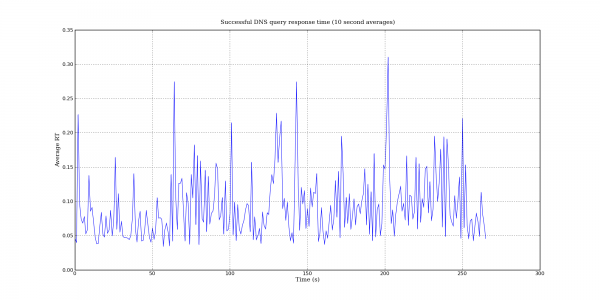

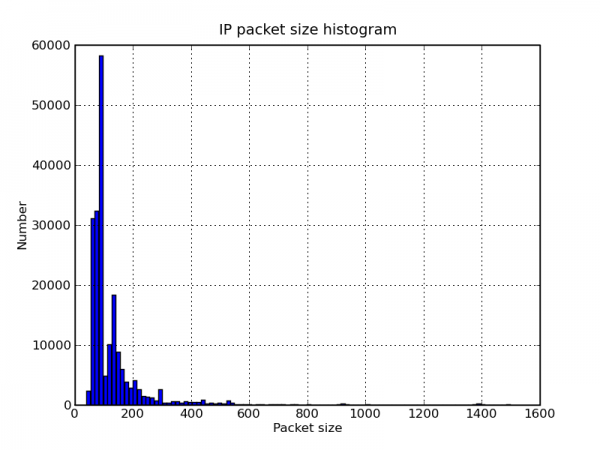

Following my last post on DNS query port usage, here are some more interesting DNS graphs.

The following graphs are based on a packet capture taken from the network interface of a recursive DNS server. This DNS server is one of the primary recursive DNS servers for a small Internet service provider. The capture includes all UDP DNS traffic to the DNS server as well as UDP DNS traffic from the DNS server to addresses within the local AS.

    /usr/sbin/capinfos local.pcap
    File name: local.pcap
    File type: Wireshark/tcpdump/... - libpcap
    File encapsulation: Ethernet
    Number of packets: 200000
    File size: 30702100 bytes
    Data size: 27502076 bytes
    Capture duration: 2659.328827 seconds
    Start time: Sat Jul 26 01:45:31 2008
    End time: Sat Jul 26 02:29:50 2008
    Data rate: 10341.74 bytes/s
    Data rate: 82733.89 bits/s
    Average packet size: 137.51 bytes
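As a quick sanity check, the rates that capinfos reports follow directly from the totals it prints. The short sketch below recomputes them from the figures in the output above; the variable names are my own.

```python
# Figures copied from the capinfos output above.
packets = 200000
data_bytes = 27502076       # "Data size"
duration_s = 2659.328827    # "Capture duration"

bytes_per_second = data_bytes / duration_s
bits_per_second = bytes_per_second * 8
average_packet_size = data_bytes / packets
packets_per_second = packets / duration_s  # not reported by capinfos here

print(f"{bytes_per_second:.2f} bytes/s")
print(f"{bits_per_second:.2f} bits/s")
print(f"{average_packet_size:.2f} bytes per packet")
print(f"{packets_per_second:.1f} packets/s")
```

The first three values should agree with the "Data rate" and "Average packet size" lines; the packet rate is an extra figure derived the same way.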

DNS query UDP source port graphs

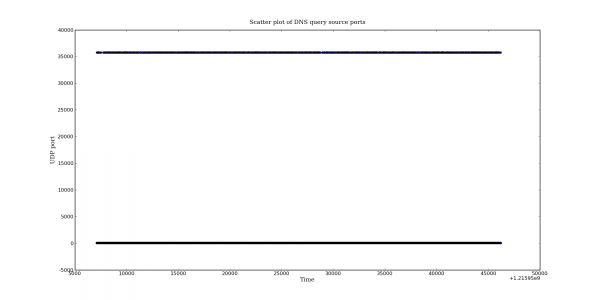

Recently Dan Kaminsky announced a new DNS vulnerability. This isn’t a vulnerability in a particular DNS implementation but a problem with the DNS protocol itself. You can find information from CERT here. The exact details of the vulnerability were kept quiet even after DNS software vendors simultaneously released patches to mitigate the problem. One of the main changes made by these patches was to increase the number of source ports used for outgoing queries to other DNS servers. From this information it was widely speculated that the vulnerability is related to cache poisoning.
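Some general background on why the number of source ports matters for cache poisoning: an off-path attacker forging a reply has to match both the 16-bit DNS transaction ID and the UDP port the query was sent from. The sketch below only does that arithmetic. The port range is an assumption for illustration; the post does not say which range the patched servers actually draw from.

```python
TXID_BITS = 16  # the DNS header carries a 16-bit transaction ID


def spoof_search_space(source_ports: int) -> int:
    """Number of (transaction ID, source port) pairs a forged reply must guess among."""
    return (2 ** TXID_BITS) * source_ports


# A resolver that always sends queries from one fixed port.
fixed_port = spoof_search_space(1)

# Assumption: a patched resolver picks uniformly from ports 1024-65535.
random_ports = spoof_search_space(65535 - 1024 + 1)

print(fixed_port)
print(random_ports)
print(random_ports // fixed_port)  # how many times larger the search space is
```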

Perhaps partly due to an accidental early release of information, the full vulnerability details are now available.

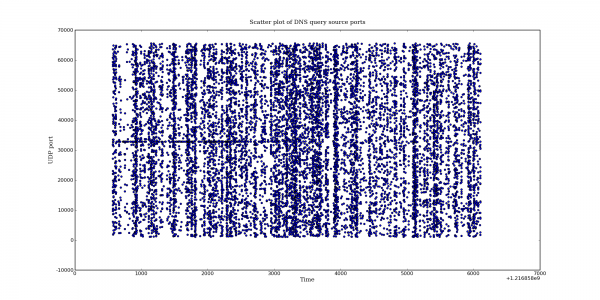

I happened to have some DNS captures available from before and after the patch was applied, so I thought it might be interesting to graph the UDP query port usage behaviour before and after the patch. The graphs presented below come from a RHEL 5.2 based DNS server. The post-patch DNS server version is bind-9.3.4-6.0.2.P1.el5_2. I don’t have the pre-patch version number handy but presumably it is the previous BIND package released by Red Hat. Both of the captures came from the same DNS server but note that the capture lengths are different.

The difference is quite dramatic. BIND appears to be making good use of almost the entire port space.

Also note the interesting banding in the second graph. This behaviour is not limited to the new patch. I have noticed this in other pre-patch captures as well. More on that later.
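For anyone who wants to tally source ports from their own capture, here is a minimal sketch using only the Python standard library. It is not the tooling used for the graphs in this post. It assumes a classic libpcap file with Ethernet framing, untagged IPv4 and unfragmented packets, and it treats every UDP packet addressed to port 53 as a query. The function names and the optional resolver_ip filter are my own.

```python
import socket
import struct
from collections import Counter


def query_source_ports(stream, resolver_ip=None):
    """Yield the UDP source port of each IPv4 packet addressed to port 53.

    stream is a binary file object holding a classic libpcap capture.
    If resolver_ip is given, only packets sent from that address are used.
    """
    header = stream.read(24)  # libpcap global header
    if header[:4] == b"\xd4\xc3\xb2\xa1":
        endian = "<"
    elif header[:4] == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a classic libpcap file")
    if struct.unpack(endian + "I", header[20:24])[0] != 1:
        raise ValueError("only Ethernet captures are handled")
    wanted_src = socket.inet_aton(resolver_ip) if resolver_ip else None

    while True:
        record = stream.read(16)  # per-packet record header
        if len(record) < 16:
            break
        incl_len = struct.unpack(endian + "I", record[8:12])[0]
        frame = stream.read(incl_len)
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":
            continue  # truncated frame or not IPv4
        if frame[23] != 17:
            continue  # IP protocol field is not UDP
        if wanted_src and frame[26:30] != wanted_src:
            continue  # not sent by the resolver we care about
        udp = 14 + (frame[14] & 0x0F) * 4  # Ethernet header + IP header length
        if len(frame) < udp + 4:
            continue
        sport, dport = struct.unpack("!HH", frame[udp:udp + 4])
        if dport == 53:
            yield sport


def summarise(ports):
    """Count queries and distinct source ports."""
    counts = Counter(ports)
    return {"queries": sum(counts.values()), "distinct_ports": len(counts)}
```

To use it, open the capture in binary mode and pass the file object to query_source_ports, giving the resolver's own IPv4 address as resolver_ip so that only its outgoing queries are counted, then hand the result to summarise. A per-port Counter from the same generator is also enough to feed a plotting tool.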

Search Engine on CBC

This probably isn’t news to many people by now but CBC’s Search Engine will not be returning in the fall. What a loss. To me Search Engine is a great example of what a radio show and Podcast can be. The show had strong audience participation and felt almost more like a blog post than a traditional radio show. More importantly, Search Engine covered digital issues such as Copyright reform in a way that is greatly needed at this time.

I really hope that CBC will reconsider this cancellation. Public broadcasters need to bring in young people and new listeners. A new and experimental format like Search Engine is a great way to accomplish this. The huge amount of interest in this spring’s Copyright reform bill shows that many Canadians are becoming aware of the topics Search Engine covered. Now is not the time to give up on this show.

Fortunately it looks like Search Engine’s sister show, Spark, is still going to continue.

Chicago (U-505)

About a month ago Karen and I spent the weekend in Chicago. If you haven’t been to Chicago I would recommend it. We had a great time.

One of the highlights and quite possibly the coolest museum exhibit I’ve seen was U-505 at the Museum of Science and Industry. This was worth the trip by itself. I have a bunch more pictures here.

IPv6

For the first time in almost a month I had a bit of free time for experimentation today, so I decided it was time I set up my home network to use IPv6. I’ve tried to keep up on the development and deployment of IPv6 but, besides setting up a few internal network nodes with IPv6 addresses, I haven’t played with it much in the past.

Background

Before I get into configuration here are a few links to some of the better articles and videos that I discovered today. Some of these are pretty technical.

Current projections on the time frame for IPv4 address exhaustion.

What this prediction is saying is that some time between late 2009 and late 2011, and most likely in mid-2010, when you ask your local RIR for another allocation of IPv4 addresses your request is going to be denied.

This article describes 6to4 and Teredo which are two technologies that aim to ease the transition from IPv4 to IPv6.

IPv6 Deployment: Just where are we?

Current IPv6 usage estimations.

Videos from Google’s IPv6 conference in May 2008.

IPv6 content

Other than gaining some experience with IPv6 there really isn’t a lot of benefit to using IPv6 yet. However, if you are in any way involved with IP networking it may be time to start learning about IPv6. Current projections have the IPv4 address space being exhausted in mid-2010 (The End of the (IPv4) World).

At the moment there are very few sites accessible via IPv6 and even fewer are IPv6 only. Google has set up an IPv6 version of their main search site at ipv6.google.com. It is unfortunate that Google does not have an IPv6 (AAAA) record for www.google.com yet, but given that providing an AAAA record to some hosts without IPv6 connectivity can cause problems, their choice is not surprising. Hopefully these kinks can be worked out as more people gain experience with IPv6. Rather than trying to remember to type ipv6.google.com all of the time I have locally aliased www.google.com to ipv6.google.com. Everything seems to be working normally so far.

SixXS maintains a list of IPv6 content at Cool IPv6 Stuff. A couple of highlights from this list are the official Beijing 2008 Olympic website and some IPv6-only BitTorrent trackers.

It has been said that the availability of porn is what really drove the adoption of the Internet. In this spirit The Great IPv6 Experiment is collecting copyright licenses to a large amount of commercial pornography and regular television shows for distribution only via IPv6. The project is due to launch sometime “soon”. What a great idea for an experiment.

A short tutorial for IPv6 and 6to4 on Fedora 9

Getting things set up turned out to be pretty easy on Fedora. I expect the same is true of any Linux distribution although the details will differ. Since most ISPs do not have native IPv6 support, special technologies are required to connect to other IPv6 nodes over IPv4. I chose 6to4 to connect to the IPv6 network. 6to4 requires a public IP address, so if you are behind NAT look into using Teredo instead. Incidentally, Teredo is supported by Windows Vista.

The first step is to enable IPv6 and 6to4 on the publicly facing network interface. The required configuration file can be found in /etc/sysconfig/network-scripts/ifcfg-XXX where XXX is the name of your publicly facing network interface. Some or all of this may be configurable through system-config-network and other GUI tools but I tend to stick to configuration files. I added the following lines:

    IPV6INIT=yes
    IPV6TO4INIT=yes
    IPV6_CONTROL_RADVD=yes

A few new entries were also required in the global network configuration (/etc/sysconfig/network).

    NETWORKING_IPV6=yes
    IPV6FORWARDING=yes
    IPV6_ROUTER=yes
    IPV6_DEFAULTDEV="tun6to4"

Note that if the computer you are configuring is not going to act as an IPv6 gateway for other hosts on your network, you probably don’t want to add IPV6FORWARDING and IPV6_ROUTER. After editing these files restart the network service.

/sbin/service network restart

You should now have a new network interface named tun6to4 with an IPv6 address starting with 2002 assigned to it. The address space dedicated to 6to4 is 2002::/16, so the first sixteen bits of every 6to4 address are 2002 in hexadecimal notation.

/sbin/ifconfig tun6to4
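If you want to double check the address that shows up on tun6to4, Python's standard ipaddress module can confirm that it falls inside 2002::/16 and recover the IPv4 address embedded in it. This is only a sketch; the helper name is my own and the sample address in the usage note is built from the documentation address 192.0.2.1, not from my network.

```python
import ipaddress

SIXTOFOUR_SPACE = ipaddress.ip_network("2002::/16")


def embedded_ipv4(address: str):
    """Return the IPv4 address embedded in a 6to4 address, or None.

    Accepts the address with or without a prefix length, e.g. as
    copied from ifconfig output.
    """
    addr = ipaddress.ip_interface(address).ip
    if addr.version != 6 or addr not in SIXTOFOUR_SPACE:
        return None
    return addr.sixtofour
```

For example, embedded_ipv4("2002:c000:201::1/16") gives back 192.0.2.1, which should match the public IPv4 address of the machine if 6to4 was configured correctly.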

Now try pinging an IPv6 address.

ping6 ipv6.google.com

If you can reach ipv6.google.com you have working IPv6 connectivity. If you are not configuring an IPv6 gateway you can ignore everything below this point.

In order for the configured host to act as a gateway for IPv6 traffic it needs to advertise the IPv6 network prefix to the rest of your network. IPv6 doesn’t require DHCP for automatic address configuration but does require prefix announcement so the local node can figure out its IPv6 address. Prefix advertisements are handled by the radvd daemon. Below is the configuration I used (/etc/radvd.conf). Note the leading zeros in the prefix. This indicates that radvd should create the IPv6 prefix using the special 6to4 format.

    interface eth0
    {
        AdvSendAdvert on;
        MinRtrAdvInterval 30;
        MaxRtrAdvInterval 100;
        prefix 0:0:0:0001::/64
        {
            AdvOnLink on;
            AdvAutonomous on;
            AdvRouterAddr off;
            Base6to4Interface ppp0;
            AdvPreferredLifetime 120;
            AdvValidLifetime 300;
        };
    };
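To make the zero-filled prefix a little less mysterious: with Base6to4Interface set, radvd replaces the first 16 bits of the configured prefix with 2002 and the next 32 bits with the IPv4 address of the named interface, leaving the remaining bits (here the subnet 0001) as written. The sketch below mimics that substitution. The function name is my own and 192.0.2.1 in the usage note is a documentation address standing in for the real public IPv4 address.

```python
import ipaddress


def advertised_6to4_prefix(public_ipv4: str, template: str = "0:0:0:0001::/64"):
    """Combine a radvd-style zero-filled prefix template with an IPv4 address."""
    template_net = ipaddress.IPv6Network(template)
    v4 = int(ipaddress.IPv4Address(public_ipv4))
    # Keep only the low 80 bits of the template (subnet ID and below).
    low_80_bits = int(template_net.network_address) & ((1 << 80) - 1)
    value = (0x2002 << 112) | (v4 << 80) | low_80_bits
    return ipaddress.IPv6Network((value, template_net.prefixlen))
```

With the template from the configuration above, advertised_6to4_prefix("192.0.2.1") yields 2002:c000:201:1::/64, which is the kind of prefix the other nodes on the network should end up using.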

After restarting radvd the other IPv6 capable nodes on your local network should also be automatically assigned an IPv6 address starting with 2002.

/sbin/service radvd start

I’m not sure if this is the way it is supposed to work or not, but eth0 on my gateway never obtains a 2002 IPv6 address automatically (this is the box radvd is running on). As a result, I assigned the IPv6 address manually. Since my external IPv4 address never changes this isn’t a problem for me, but it seems wrong to have to manually change the interface address if the external IPv4 address changes even though radvd will correctly advertise the new IPv6 prefix to the rest of the network automatically.

If you already know how IPv6 addresses are constructed skip this paragraph. In what will likely be the most common deployment model, IPv6 addresses are constructed of two parts: a 64-bit network identifier (prefix) and a 64-bit host identifier. The network identifier is assigned by the ISP. In the case of 6to4 the network prefix is constructed by setting the first sixteen bits of the address to 2002 and following them with your public IPv4 address. The host or node identifier is constructed by extending the 48-bit MAC address to 64 bits.
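The MAC-to-host-identifier step follows the modified EUI-64 scheme: the MAC address is split in half, the bytes ff:fe are inserted in the middle, and the universal/local bit of the first byte is flipped. Here is a small sketch of that, with a made-up MAC address in the usage note. Hosts that use privacy addresses will pick their identifiers differently.

```python
def eui64_interface_id(mac: str) -> str:
    """Build the 64-bit interface identifier (modified EUI-64) from a MAC address."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address such as 00:12:34:56:78:9a")
    octets[0] ^= 0x02  # flip the universal/local bit
    eui64 = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    # Format as four 16-bit groups without leading zeros, as in an IPv6 address.
    groups = [f"{int.from_bytes(eui64[i:i + 2], 'big'):x}" for i in range(0, 8, 2)]
    return ":".join(groups)
```

For the hypothetical MAC 00:12:34:56:78:9a this gives 212:34ff:fe56:789a, which is also what appears after “fe80::” in that interface's link-local address.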

Determining the IPv6 address to assign to the internal interface (eth0) is a little tricky. First get the network prefix portion of the IPv6 address assigned to the tun6to4 interface. You want everything before the /16. This is the first 64 bits of your IPv6 address. Then look at the link-local IPv6 address which is automatically created on eth0. This address will start with fe80. The last 64 bits of this address are also the last 64 bits of the new address because they are derived from the MAC address of the network interface. Copy everything after “fe80::”. Append this to the previously obtained network prefix, separating the values with a colon. You now have the IPv6 address. Add an “IPV6ADDR=” line with this address to /etc/sysconfig/network-scripts/ifcfg-eth0 and restart the network service (or only the interface if you like). You should now be able to ping6 between network nodes using the 2002 prefixed IPv6 addresses.
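The same combination can be done numerically instead of by copying pieces of text, which avoids mistakes with the “::” shorthand. The sketch below assumes you already know the /64 prefix being advertised on the internal network and the link-local address of eth0. The function name is my own and the values in the usage note are illustrative (documentation IPv4 address 192.0.2.1, subnet 1, made-up MAC).

```python
import ipaddress


def global_from_link_local(prefix: str, link_local: str) -> ipaddress.IPv6Address:
    """Join a /64 network prefix with the low 64 bits of a link-local address."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("expected a /64 prefix")
    host_bits = int(ipaddress.IPv6Address(link_local)) & ((1 << 64) - 1)
    return ipaddress.IPv6Address(int(network.network_address) | host_bits)
```

For example, global_from_link_local("2002:c000:201:1::/64", "fe80::212:34ff:fe56:789a") gives 2002:c000:201:1:212:34ff:fe56:789a, which is the sort of value that would go on the IPV6ADDR line.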

Once you have established connectivity between the nodes, try running ping6 against ipv6.google.com from the internal network nodes. If the ping fails you will likely have to investigate the iptables and ip6tables rules on both the gateway and the internal nodes.