Some time ago I started writing a blog post to help myself better understand where packets can be queued within the Linux kernel. This relates to my long-time interest in optimizing for latency and experimenting with the kernel’s QoS features. By the time I was ready to hit the publish button, the blog post was several thousand words long and I had gotten some nice feedback, so I decided to submit it to Linux Journal instead. If you are a Linux Journal subscriber you can now find the article in the July 2013 issue, which has a focus on networking.


An introduction to SDN

System virtualization moves the edge of the network

One of the biggest innovations of the Internet was moving the intelligence from the network to the edge devices. Making the end host responsible for data delivery and creating a network architecture that is application agnostic were radical and incredibly successful ideas. Although much of the architecture made this switch, the demarcation between the network owner and its users forced some features, such as access control and provisioning, to remain in the access routers and switches. Given the relationship of consumers to their service providers, this will probably never change in the consumer Internet market, but something very interesting is happening within data centres due to virtualization.

Moving to the Edge: An ACM CTO Roundtable on Network Virtualization

One of the most interesting ideas in the discussion linked to above is that the advent of system virtualization necessarily moves the point of enforcement, or intelligence, from the network access layer into the host itself. This comes in the form of the networking features of hypervisors. Hypervisors implement switching and routing, but what’s really interesting is that they are also the best location for functions such as firewalls, because implementing these functions as separate devices greatly limits the flexibility of the virtualized data centre. Imagine migrating a VM anywhere in the data centre and having its firewall rules follow automatically to the new host, versus having to choose amongst N hosts which are behind the same firewall.

Two groups may be affected greatly by this change: network equipment vendors and IT networking professionals.

I do not believe that owners of existing network infrastructure need to worry about the hardware they already have in place. Chances are your existing network infrastructure provides adequate bandwidth. Longer term, networking functions are being pulled into software, and you can probably keep your infrastructure. The reason you buy hardware the next time will be because you need more bandwidth or less latency. It will not be because you need some virtualization function. (Martin Casado)

The above argues that existing network switches and routers are already good enough for this new architecture. That is, networking equipment will become further commoditized which may not be good from the perspective of Cisco and other equipment vendors.

What about switch and router experts?

The people who will be left out in the cold are the folks in IT who have built their careers tuning switches. As the edge moves into the server where enforcement is significantly improved, there will be new interfaces that we’ve not yet seen. It will not be a world of discover, learn, and snoop; it will be a world of know and cause. (Lin Nease)

and

There’s a contention over who’s providing the network edge inside the server. It’s clearly going inside the server and is forever gone from a dedicated network device. A server-based architecture will eventually emerge providing network-management edge control that will have an API for edge functionality, as well as an enforcement point. The only question in my mind is what will shake out with NICs, I/O virtualization, virtual bridges, etc. Soft switches are here to stay, and I believe the whole NIC thing is going to be an option in which only a few will partake. The services provided by software are what is of value here, and Moore’s law has cheapened CPU cycles enough to make it worthwhile to burn switching cycles inside the server.

If I’m a network guy in IT, I better much more intensely learn the concept of port groups, how VMware, Xen, etc. work, and then figure out how to get control of the password and get on the edge. Those folks now have options that they have never had before.

The guys managing the servers are not qualified to lead on this because they don’t understand the concept of a single shared network. They think in terms of bandwidth and VPLS (virtual private LAN service) instead of thinking about the network as one system that everybody shares and is way oversubscribed. (Lin Nease)

Of course networking experts will still be required but this new world may involve spending a lot more time managing servers than at the router/switch CLI.

The simple network continues to win.

Making the Linux flow classifier tunnel aware

Flow Classifier

The Linux kernel has many different tools for managing traffic. One of them is the flow classifier which allows the user to configure which fields of the packet headers should be used to create a hash which is then used to identify flows and manage them. For example, if the user selects src,dst,proto,proto-src,proto-dst they get a unique value for each flow (within the limits of the hash). Alternatively, using only src as the key will result in all flows being grouped by the source IP address.
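To make the idea concrete, here is a minimal Python sketch of key-based flow hashing. It is only an illustration: the kernel does not use CRC32 for this, and `Packet`, `classify` and the example addresses are hypothetical names and values chosen for the sketch.

```python
import zlib
from typing import NamedTuple, Sequence


class Packet(NamedTuple):
    """Only the header fields the example keys refer to."""
    src: str
    dst: str
    proto: int
    proto_src: int
    proto_dst: int


FIVE_TUPLE = ["src", "dst", "proto", "proto-src", "proto-dst"]


def classify(pkt: Packet, keys: Sequence[str], n_queues: int) -> int:
    """Hash only the selected header fields and map the result to a queue index."""
    # Key names use dashes (as in tc); the Packet attributes use underscores.
    material = "|".join(str(getattr(pkt, k.replace("-", "_"))) for k in keys)
    # CRC32 is a stand-in for the kernel's hash function (assumption for the sketch).
    return zlib.crc32(material.encode()) % n_queues


# Two different flows from the same (documentation-range) source address.
ssh = Packet("192.0.2.10", "198.51.100.1", 6, 40000, 22)
dns = Packet("192.0.2.10", "198.51.100.53", 17, 40001, 53)

# Keyed on src only, both packets hash the same material and share a queue.
shared = classify(ssh, ["src"], 1024) == classify(dns, ["src"], 1024)

# Keyed on the 5-tuple, each flow hashes its own material. Collisions are
# still possible, which is the "within the limits of the hash" caveat.
q_ssh = classify(ssh, FIVE_TUPLE, 1024)
q_dns = classify(dns, FIVE_TUPLE, 1024)
```

The choice of keys is therefore a choice of what counts as "one flow": fewer keys means coarser grouping.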

The Problem

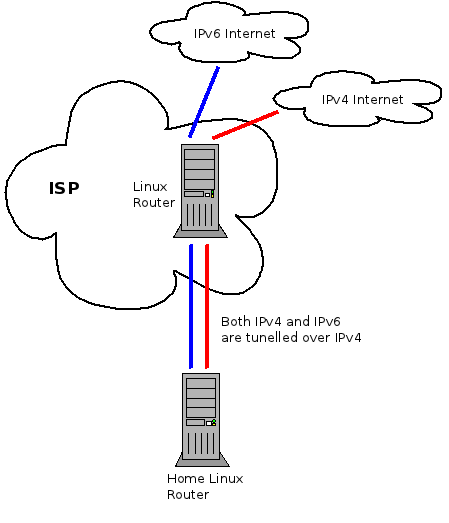

Below is a slightly simplified version of my home network.

All of the traffic, both IPv4 and IPv6, is tunnelled through a Linux router which lives at my service provider. The reason for this complicated setup is that it gives me control of the traffic in both the upstream and downstream. By shaping the traffic to just below the maximum rate in each direction I am able to avoid Bufferbloat problems and prioritize latency sensitive traffic such as SSH, DNS and Vonage. Especially under load, my QoS scripts make a marked difference in how fast the Internet feels.

The multiple tunnels present a problem for implementing my QoS scheme because from the perspective of the underlying interface there are only two flows on the network: one for the IP-IP tunnel and one for the IP-IPv6 tunnel. A workaround I used for a while was to apply the QoS rules to the IP-IP tunnel interface because that’s where the bulk of the traffic flows. However, this meant that IPv6 traffic was not properly controlled and any time I had a significant amount of IPv6 traffic I lost all the advantages of my QoS scheme.

To solve this properly I needed a way to look into the tunnels in order to identify the inner network flows. So I’ve extended the flow classifier with the keys in the following table. IP-IP, IP-IPv6, IPv6-IP and IPv6-IPv6 tunnels are supported.

| Key | Description |
| --- | --- |
| tunnel-src | Extract the source IP from the inner header |
| tunnel-dst | Extract the destination IP from the inner header |
| tunnel-proto | Extract the protocol from the inner header |
| tunnel-proto-src | Extract the transport protocol source port from the inner header |
| tunnel-proto-dst | Extract the transport protocol destination port from the inner header |
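To show what these keys imply, here is a rough Python sketch of the lookup: skip the outer header, then read the addresses, protocol and ports from the inner one. It is an illustration only, not the patch. It handles just an IPv4 outer header, does not walk IPv6 extension headers, and `inner_flow`, `InnerFlow` and `ipv4_header` are made-up names.

```python
import socket
import struct
from typing import NamedTuple, Optional

IPPROTO_IPIP = 4     # IPv4 carried inside IP
IPPROTO_IPV6 = 41    # IPv6 carried inside IP
PROTOS_WITH_PORTS = {6, 17}  # TCP and UDP only, to keep the sketch short


class InnerFlow(NamedTuple):
    src: str
    dst: str
    proto: int
    sport: int
    dport: int


def ipv4_header(src: str, dst: str, proto: int, payload_len: int) -> bytes:
    """Build a minimal 20-byte IPv4 header (checksum left at zero) for testing."""
    return struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + payload_len, 0, 0, 64,
                       proto, 0, socket.inet_aton(src), socket.inet_aton(dst))


def inner_flow(outer: bytes) -> Optional[InnerFlow]:
    """Return the inner flow of an IPv4-encapsulated packet, or None if not tunnelled."""
    if len(outer) < 20 or outer[0] >> 4 != 4:
        return None
    ihl = (outer[0] & 0x0F) * 4          # outer header length in bytes
    outer_proto = outer[9]
    inner = outer[ihl:]
    if outer_proto == IPPROTO_IPIP and len(inner) >= 20:
        proto = inner[9]
        src = socket.inet_ntop(socket.AF_INET, inner[12:16])
        dst = socket.inet_ntop(socket.AF_INET, inner[16:20])
        l4 = inner[(inner[0] & 0x0F) * 4:]
    elif outer_proto == IPPROTO_IPV6 and len(inner) >= 40:
        proto = inner[6]                 # next header field
        src = socket.inet_ntop(socket.AF_INET6, inner[8:24])
        dst = socket.inet_ntop(socket.AF_INET6, inner[24:40])
        l4 = inner[40:]
    else:
        return None
    sport = dport = 0
    if proto in PROTOS_WITH_PORTS and len(l4) >= 4:
        sport, dport = struct.unpack("!HH", l4[:4])
    return InnerFlow(src, dst, proto, sport, dport)


# Example: an SSH segment (ports only) wrapped in an inner and an outer IPv4 header.
tcp_ports = struct.pack("!HH", 40000, 22)
inner_pkt = ipv4_header("10.0.0.2", "203.0.113.5", 6, len(tcp_ports)) + tcp_ports
outer_pkt = ipv4_header("192.0.2.1", "198.51.100.1", IPPROTO_IPIP, len(inner_pkt)) + inner_pkt
flow = inner_flow(outer_pkt)
```

Hashing on the fields of `flow` rather than on the outer header is what lets flows inside the same tunnel be told apart.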

Results

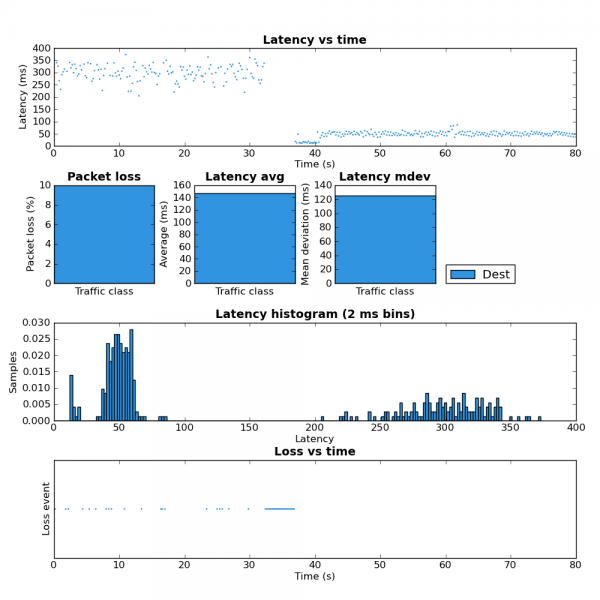

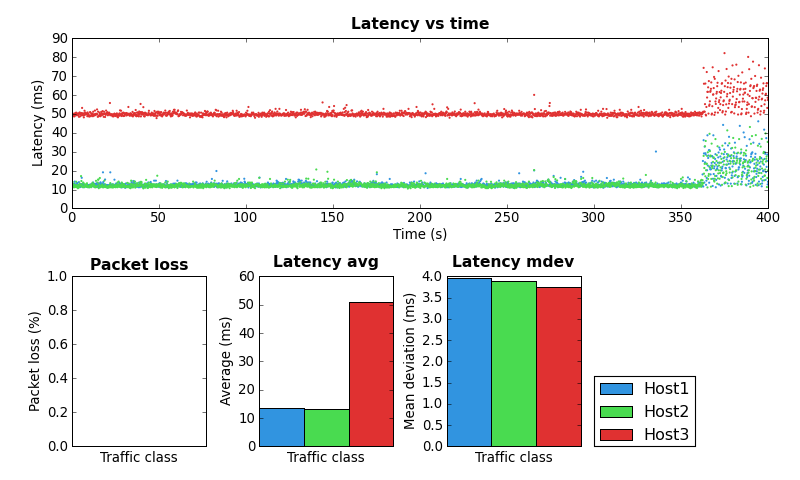

In order to validate that this works I started a couple of SCP uploads to max out the upstream bandwidth and then ran ping-exp to measure the latency. At the start of the test the flow classifier keys were src,dst,proto,proto-src,proto-dst. Approximately half way through I changed the keys to src,dst,proto,proto-src,proto-dst,tunnel-src,tunnel-dst,tunnel-proto,tunnel-proto-src,tunnel-proto-dst. The advantage of keeping the non-tunnel keys is that any traffic created by the router itself is still classified properly. Here is the tc script I used. You can see the results of this test in figure 2.

For the first half of the test you can see the high latency. This is due to all the traffic from the SCP upload and ICMP pings being placed into the same queue because from the perspective of the flow classifier there is only one flow. In the second half of the test the addition of the tunnel keys allows the flow classifier to place the ICMP packets into a different queue which is not affected by the SCP upload and therefore has much lower latency. The large amount of packet loss during the key change is because the script I used creates a large number of queues. While these queues are being created packets are dropped.

While my network setup may be a bit unique I think it’s likely that many home networks will have some form of tunnelling in the near future as tunnels are part of several IPv6 migration strategies. So hopefully this little addition will be useful in many different contexts.

Below are links to the two patches that are required. I’ll post them to Netdev for review shortly.

Linux flow classifier proto-dst and TOS

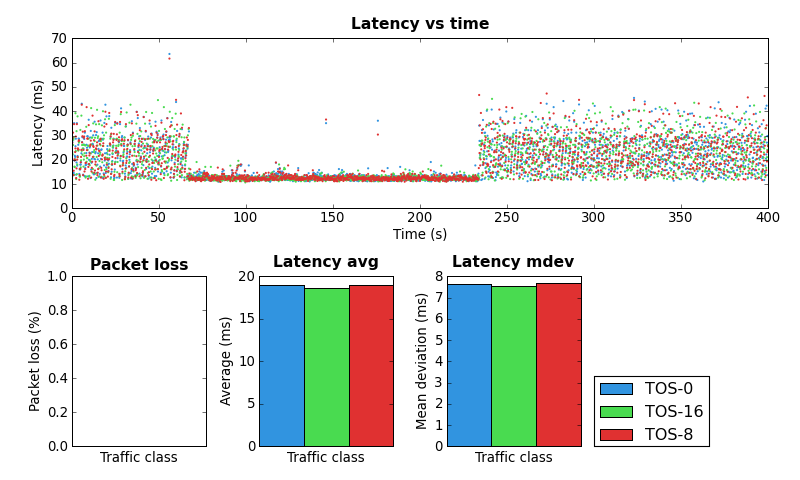

Recently I’ve been playing around with the Linux flow classifier on my gateway. The flow classifier provides the ability to group network flows by configuring which parts of the packet headers (referred to as keys) are used in a hash calculation which chooses the output queue.

All of my Internet traffic travels over an IPIP tunnel to another Linux box. I do this so I have control of the QoS in both the upstream and the downstream. A result of this configuration is that from the perspective of the output interface there is only a single network flow.

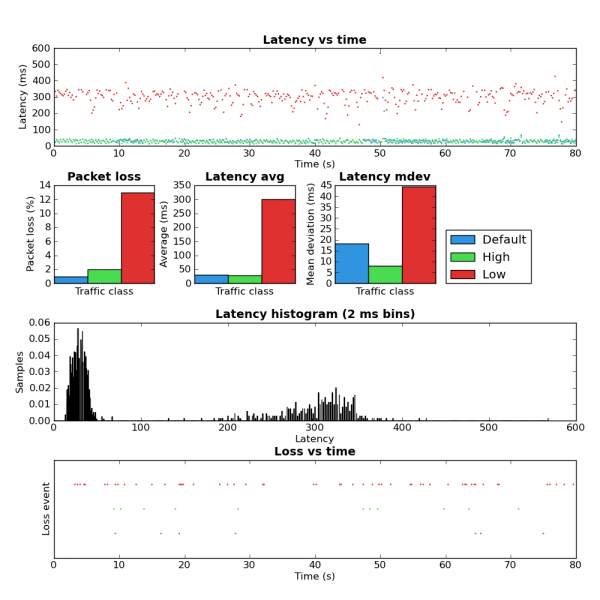

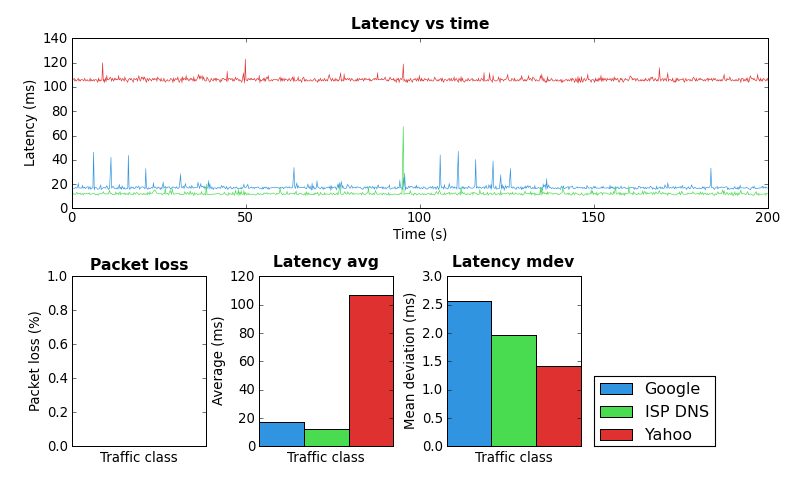

I configured the flow classifier to use the src,dst,proto,proto-src,proto-dst keys, which aims to provide 5-tuple flow fairness. Here’s the simple tc script I used. Due to the IPIP tunnel I expected to see all traffic placed into the same queue. Strangely, here is what my little ping-exp utility showed when run at the same time as an SCP upload.

Coincidentally I ran ping-exp configured to send three different streams of ICMP traffic with different IP TOS values. Note that SCP automatically sets the IP TOS to the equivalent of the “Low” stream in the test.

Notice that the pings using the high and default TOS values appear to be unaffected by the low priority ping and SCP traffic. This was unexpected because none of the src, dst, proto, proto-src or proto-dst keys should be affected by the TOS value.

After a bit of experimentation I determined that the proto-dst key was the source of the problem. If you spend a bit of time with the flow_get_proto_dst() function in cls_flow.c you’ll see that if the protocol is ICMP or IPIP, as it is in my test, then the following value is returned:

return addr_fold(skb_dst(skb)) ^ (__force u16)skb->protocol;

skb_dst() returns a pointer to a dst_entry structure. Since Linux maintains a separate dst_entry structure for each (destination, TOS) pair, the source of the unexpected behaviour is obvious: the value depends on which structure the packet points at, not on the packet headers.
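A small Python model may help show why this matters. It is modelled loosely on addr_fold() in cls_flow.c and makes several assumptions: a 64-bit kernel, byte order of skb->protocol ignored, and two made-up pointer values standing in for the dst_entry structures of the same destination with different TOS values.

```python
BITS_PER_LONG = 64  # assumption: 64-bit kernel


def addr_fold(addr: int) -> int:
    """Fold a pointer-sized value into 32 bits by XORing the two halves."""
    folded = addr & 0xFFFFFFFF
    if BITS_PER_LONG > 32:
        folded ^= addr >> 32
    return folded


def proto_dst_fallback(dst_entry_addr: int, skb_protocol: int) -> int:
    """Model of the value used for proto-dst when the packet has no ports."""
    return addr_fold(dst_entry_addr) ^ (skb_protocol & 0xFFFF)


ETH_P_IP = 0x0800

# Hypothetical addresses: same destination, different TOS, so different
# dst_entry structures and therefore different pointers.
dst_default_tos = 0xFFFF880012345600
dst_low_tos = 0xFFFF880012345A80

key_default = proto_dst_fallback(dst_default_tos, ETH_P_IP)
key_low = proto_dst_fallback(dst_low_tos, ETH_P_IP)

# The headers hashed by the other keys are identical, yet the proto-dst
# contribution differs, so the two TOS classes can land in different queues.
differs = key_default != key_low
```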

I’m not knowledgeable enough about the Linux network stack to be certain but I don’t see any value in returning a value for proto-dst which is random with respect to the actual traffic on the wire. At the very least this is not intuitive behaviour.

If you look at flow_get_proto_src() you’ll see something similar:

return addr_fold(skb->sk);

In this case a pointer to the local socket structure is used as a fallback. Again, this has no relation to the actual packets on the wire and if the packet does not originate at the local machine then no socket exists which causes this value to be zero anyway.

It seems to me that the most intuitive behaviour would be to have the proto-src and proto-dst keys return zero when they are applied to traffic that doesn’t have the notion of transport layer ports.
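As a sketch of that proposal (not kernel code, and not a patch), the behaviour could look like this in Python. `proto_ports` and the protocol set are names and choices made up for the illustration.

```python
import struct
from typing import Tuple

# Transport protocols whose headers begin with 16-bit source and destination
# ports: TCP, UDP, DCCP, SCTP and UDP-Lite. An illustrative set only.
PORT_PROTOCOLS = {6, 17, 33, 132, 136}


def proto_ports(ip_proto: int, l4_header: bytes) -> Tuple[int, int]:
    """Return (source port, destination port), or (0, 0) if there are none."""
    if ip_proto in PORT_PROTOCOLS and len(l4_header) >= 4:
        sport, dport = struct.unpack("!HH", l4_header[:4])
        return (sport, dport)
    # ICMP, IPIP and friends have no ports, so contribute a constant to the
    # hash instead of something unrelated to the packet on the wire.
    return (0, 0)
```

With this, ICMP or IPIP packets between the same two hosts would always hash to the same value regardless of TOS or local socket state.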

I’ll post to Netdev about this and see what the kernel devs have to say.

Related to this, I have a patch to the flow classifier that adds tunnel awareness which I plan to post to Netdev this weekend as well.

Fixed: pfifo_fast and ECN

Last weekend I wrote up a problem I discovered with the Linux pfifo_fast QDisc and ECN. This resulted in discussions on the Bufferbloat and Netdev lists and eventually a bug fix which is now in the net-next tree and will eventually be in the mainline kernel.

Cool!

Linux SFQ experimentation

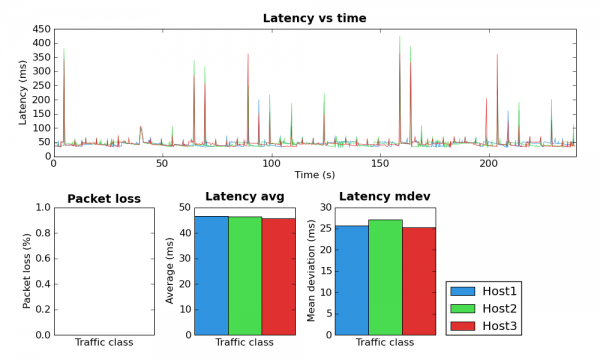

I’ve been doing some more experimentation with Linux QoS configurations using my ping-exp utility. Today I noticed that whenever I add an SFQ to the configuration there are large latency spikes. After a bit of digging it appears that these spikes happen when the SFQ changes its flow hash. This occurs every perturb interval as configured when the SFQ is created.
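To illustrate what "changing the flow hash" means, here is a toy Python model of a perturbed hash. It is not SFQ's actual hash function; CRC32, `sfq_bucket`, `moved_flows` and the example flows are all assumptions made for the sketch. It only shows that a new perturbation value can reassign existing flows to different buckets, not why that produces a latency spike.

```python
import zlib
from typing import Iterable, List, Tuple

Flow = Tuple[str, str]  # (source, destination), enough for the toy model


def sfq_bucket(flow: Flow, perturbation: int, n_buckets: int = 1024) -> int:
    """Map a flow to a bucket; the perturbation value is mixed into the hash."""
    material = f"{perturbation}|{flow[0]}|{flow[1]}".encode()
    return zlib.crc32(material) % n_buckets


def moved_flows(flows: Iterable[Flow], old: int, new: int,
                n_buckets: int = 1024) -> List[Flow]:
    """Flows whose bucket changes when the perturbation value changes."""
    return [f for f in flows
            if sfq_bucket(f, old, n_buckets) != sfq_bucket(f, new, n_buckets)]


# Hypothetical flows: one flood target and three hosts being pinged.
flows = [("192.0.2.10", f"198.51.100.{i}") for i in range(1, 5)]

# Every perturb interval the value changes; flows are then re-spread.
reassigned = moved_flows(flows, old=1, new=2)
```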

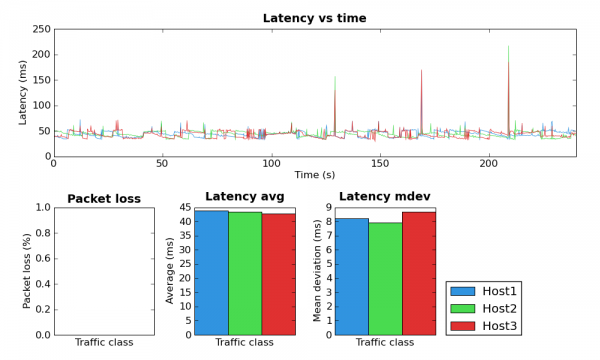

Below are the results from a couple of experiments which show this behavior. For both experiments I had two outbound ping floods of MTU sized packets. This saturated the outbound link. The experiment itself pinged three other hosts. I made sure to use four distinct hosts (one for flood, three for the experiment) to avoid collisions in the SFQ’s flow hash.

The PNGs below are not ideal for detailed inspection of the graphs. However, you can also download the data files from the experiment and load them using ping-exp. This allows zooming in on the graph. See the links at the end.

The above graph is based on an experiment where the perturb value was set to five seconds. Although the large latency spikes do not occur at every five second interval, when they do occur they are on the five second grid.

The second experiment used a perturb time of twenty seconds. Again, the latency spikes do not occur every twenty seconds but they do occur on the twenty second grid.
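The "on the grid, but not at every grid point" observation can be checked mechanically. Below is a small Python sketch of such a check. The function name, the tolerance and the spike timestamps are hypothetical and are not taken from the experiment data; `offset` is there because the grid starts when the SFQ is created, not when the experiment starts.

```python
from typing import Iterable


def on_perturb_grid(spike_times: Iterable[float], perturb: float,
                    offset: float = 0.0, tolerance: float = 0.5) -> bool:
    """True if every spike lies within `tolerance` seconds of offset + k * perturb."""
    for t in spike_times:
        nearest = round((t - offset) / perturb) * perturb + offset
        if abs(t - nearest) > tolerance:
            return False
    return True


# Made-up spike times: some grid points are skipped, but every spike that
# does occur sits close to a multiple of the perturb interval.
five_second_run = on_perturb_grid([5.1, 20.0, 34.9], perturb=5)
off_grid_run = on_perturb_grid([5.1, 12.4], perturb=5)
```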

During the experiment I ran a packet capture to make sure there wasn’t any activity that might skew the results. The amount of captured traffic was very small.

The network I performed this experiment on consists of a P3-450 Linux gateway where the QoS configuration is applied to the ppp0 device. The kernel version is 2.6.27.24-170.2.68.fc10.i686. A host behind the gateway was used to generate the ping floods and run ping-exp.

Configuration and data files

HTB SFQ limit 10 perturb 5 script

HTB SFQ limit 10 perturb 5 ping-exp data file

ping-exp: Ping experiment utility

Recently I’ve been playing with Linux’s QoS features in order to make my home Internet service a little better. Since I’m primarily interested in latency I used ping to benchmark the various configurations. This works reasonably well but it quickly becomes hard to compare the results.

So I decided to build a tool to perform several ping experiments, store the results and graph them. The result of this work is ping-exp.

At present ping-exp can vary the destination host name as well as the TOS field. The interval between pings and total number of pings is globally configurable. The results can be written to a file to be loaded later, output to a PNG or both. Line and scatter plots are supported. When not writing the image to a file ping-exp displays the graph using Matplotlib’s default graph viewer. This allows zooming in on interesting parts of the graph. In the future I’d like to add the ability to specify the ping packet size.
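For readers who want to try something similar by hand, here is a minimal Python sketch of one ping stream with a given TOS value. This is not how ping-exp itself is implemented; it simply shells out to the Linux iputils `ping` (using its `-c`, `-n` and `-Q` options) and parses the round trip times. The function names and the sample output line are made up for the illustration.

```python
import re
import subprocess
from typing import List, Optional

# Matches the per-reply "time=12.3 ms" field, not the summary line.
RTT_RE = re.compile(r"time[=<]([\d.]+) ms")


def ping_command(host: str, tos: int = 0, count: int = 1) -> List[str]:
    """Build an iputils ping command line; -Q sets the TOS byte."""
    return ["ping", "-n", "-c", str(count), "-Q", str(tos), host]


def parse_rtt_ms(line: str) -> Optional[float]:
    """Extract the round trip time in milliseconds from one line of ping output."""
    match = RTT_RE.search(line)
    return float(match.group(1)) if match else None


def run_ping(host: str, tos: int = 0, count: int = 1) -> List[float]:
    """Run ping and return the RTTs of the replies that came back."""
    result = subprocess.run(ping_command(host, tos, count),
                            capture_output=True, text=True)
    rtts = (parse_rtt_ms(line) for line in result.stdout.splitlines())
    return [rtt for rtt in rtts if rtt is not None]


# Parsing a made-up reply line, without touching the network.
sample = "64 bytes from 192.0.2.1: icmp_seq=1 ttl=64 time=12.3 ms"
sample_rtt = parse_rtt_ms(sample)
```

Running several such streams with different hosts and TOS values, then plotting the RTT lists with Matplotlib, gives roughly the kind of comparison described above.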

As an aside, Python and Matplotlib make this kind of stuff so much fun.

Below are a few graphs created by ping-exp.

A new way to look at networking

I finally got around to watching A new way to look at networking yesterday. This is a talk given by Van Jacobson at Google in 2006 (yes, it has been on my todo list for a long time). This is definitely worth watching if you are interested in networking.

A couple of quick comments (These are not particularly deep or anything. This is mostly for my own reference later.):

- He says that the current Internet was designed for conversations between end nodes but we’re using it for information dissemination.

  - Me: This distinction relies on the data being disseminated to each user being identical. However, in the vast majority of cases even data that on the surface is identical, such as web site content, is actually unique for each visitor. Any site with advertisements or customizable features is a good example. As a result we are still using the Internet for conversations in most situations.

- He outlines the development of networking:

  - The phone network was about connecting wires. Conversations were implicit.

  - The Internet added metadata (the source and destination) to the data which allowed for a much more resilient network to be created. The Internet is about conversations between end nodes.

  - He wants to add another layer where content is addressable rather than the source or destination.

- He argues for making implicit information explicit so the network can make more intelligent decisions.

  - This is what IP did by adding the source and destination to data.

- His idea of identifying the data, not the source or destination, is very interesting. A consequence of this model is that data must be immutable, identifiable and carry built-in metadata such as the version and the date. It strikes me how the internal operation of the Git version control system matches these requirements.

  - At the moment I write this cc7feea39bed2951cc29af3ad642f39a99dfe8d3 uniquely identifies the current version (content) of Linus’s kernel development tree.
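The Git comparison can be made concrete with a few lines of Python. Git names an object by the SHA-1 of a short header followed by the content, so the name is derived from the data itself rather than from where it is stored. The sketch below computes the ID of a blob (a file's content); the ID quoted above is a commit ID, which is built the same way with a `commit` header. The function name `git_blob_id` is made up for the sketch.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Return the object ID Git assigns to a blob with this exact content."""
    header = b"blob %d\x00" % len(content)
    return hashlib.sha1(header + content).hexdigest()


# The same bytes always get the same name, wherever they are stored, and
# any change to the bytes produces a different name.
empty_id = git_blob_id(b"")
hello_id = git_blob_id(b"hello world\n")
```

These should agree with what `git hash-object` reports for a file with the same content.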