Author Archives: Dan Siemon

Oh Samsung

My Galaxy S6 finally got Android 5.1 today – that’s 9 months after the 5.1 release date and almost 3 months since Android 6.0 came out.

Too late. After four Galaxy Ss (1, 2, 4 and 6), I’ve already ordered a Nexus 6P, which arrives tomorrow. I’m done with slow software updates. Nice hardware, though.

Python, Asyncio, Docker and Kubernetes

Lately I’ve spent some time learning about Docker, Kubernetes and Google Container Engine. It’s a confusing world at first, but there are enough tutorials to figure it all out if you put in the time.

While doing this, I wanted to build a simple micro-service using Python 3.5’s Asyncio features, which seemed like a perfect fit for a micro-service. To have a useful goal, I ported our code that synchronizes the NightShift application with HubSpot. This works fine, but after running it for a while I discovered that the initial structure I built hid the tracebacks of failed tasks until the program exited. Figuring out a high-level pattern that addressed this took a lot longer than I thought it would. To spare others the pain, and hopefully to create a useful tutorial, I have created a GitHub repo called python-asyncio-kubernetes-template.
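The repo’s own pattern isn’t reproduced here. As a minimal sketch of one common way to keep asyncio from hiding task tracebacks, a done-callback can log each task’s exception the moment the task finishes instead of when the program exits. This assumes Python 3.8+ (for task names), which is newer than the Python 3.5 used in the post, and `spawn`, `_report_task_exception` and the `"service"` logger are hypothetical names.

```python
import asyncio
import logging

log = logging.getLogger("service")  # hypothetical logger name


def _report_task_exception(task: asyncio.Task) -> None:
    """Done-callback: log a task's traceback the moment the task finishes."""
    if task.cancelled():
        return  # cancellation is normal during shutdown, not an error
    exc = task.exception()  # also marks the exception as retrieved
    if exc is not None:
        log.error("task %s failed", task.get_name(), exc_info=exc)


def spawn(coro, *, name=None) -> asyncio.Task:
    """Start a task whose failure is reported immediately, not at exit.

    Hypothetical helper (not from python-asyncio-kubernetes-template);
    it must be called while an event loop is running.
    """
    task = asyncio.create_task(coro, name=name)
    task.add_done_callback(_report_task_exception)
    return task
```

Starting background work with `spawn(sync_loop(), name="hubspot-sync")` instead of a bare `create_task` means a crash in that coroutine shows up in the logs right away while the rest of the service keeps running.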

This little repo has all the files required to create a simple Python/Asyncio micro-service and run it on your own Kubernetes cluster or on Google Container Engine. The micro-service has an HTTP endpoint to receive health checks from Kubernetes, and it responds properly to shutdown signals. Of course, it also prints exceptions immediately, which was the original pain point.
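A rough, standard-library-only sketch of the other two pieces, a health-check endpoint and a clean shutdown on signals, might look like this. It is not the repo’s code: the port 8080, answering every request with 200 OK, and the function names are assumptions, and `loop.add_signal_handler` only works on Unix.

```python
import asyncio
import signal

HEALTH_PORT = 8080  # hypothetical port; match it to the probe in your pod spec


async def handle_health(reader: asyncio.StreamReader,
                        writer: asyncio.StreamWriter) -> None:
    """Answer any HTTP request with 200 OK so an httpGet probe passes."""
    # Read and discard the request line and headers, up to the blank line.
    while True:
        line = await reader.readline()
        if line in (b"\r\n", b"\n", b""):
            break
    body = b"ok\n"
    header = ("HTTP/1.1 200 OK\r\n"
              "Content-Type: text/plain\r\n"
              f"Content-Length: {len(body)}\r\n"
              "Connection: close\r\n\r\n").encode()
    writer.write(header + body)
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def serve(port: int = HEALTH_PORT) -> None:
    """Serve health checks until SIGTERM or SIGINT, then stop cleanly."""
    loop = asyncio.get_running_loop()
    stop = asyncio.Event()
    for sig in (signal.SIGTERM, signal.SIGINT):
        # Kubernetes sends SIGTERM to the container when the pod is stopped.
        loop.add_signal_handler(sig, stop.set)
    server = await asyncio.start_server(handle_health, "0.0.0.0", port)
    async with server:  # leaving this block closes the listening socket
        await stop.wait()
```

In a real service, `serve()` would run alongside the worker tasks, and the stop event would also be the place to cancel them before exiting.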

The README for the project contains a simple tutorial that shows how to use this end to end.

Hopefully this saves others time. If you know of a better way to do anything I’ve done in this repo please get in touch or submit a PR. It would be great if this repo grew to become a definitive template for micro-services built with these technologies.

python-asyncio-kubernetes-template

Creating a Screencast with Audio in Linux

Lately I’ve had to create several screencasts. After some experimentation, I’ve found that the following GStreamer pipeline works well.

gst-launch-1.0 -e webmmux name=mux ! filesink location=test.webm \
    pulsesrc ! queue ! audiorate ! audioconvert ! vorbisenc ! queue ! mux.audio_0 \
    ximagesrc use-damage=false ! queue ! videorate ! videoconvert ! video/x-raw,framerate=5/1 ! vp8enc threads=2 ! queue ! mux.video_0

A couple of notes:

- use-damage=false is important if you are using a composited desktop (e.g. GNOME 3). It took a discussion with a developer on #gstreamer to figure this out.

- framerate=5/1 limits the video to 5 frames per second, which greatly reduces the amount of video encoding required. My computer couldn’t keep up without this setting. The low framerate works quite well for a screencast and keeps the files small.
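If you record often, a small Python wrapper can assemble the same pipeline with the framerate as a parameter. This is a sketch: `screencast_args` and `record` are hypothetical helpers, and it assumes `gst-launch-1.0` and the GStreamer plugins used above are installed.

```python
import shlex
import subprocess


def screencast_args(output: str = "test.webm", fps: int = 5,
                    encoder_threads: int = 2) -> list[str]:
    """Build the gst-launch-1.0 argv for the WebM screencast pipeline."""
    pipeline = (
        f"webmmux name=mux ! filesink location={shlex.quote(output)} "
        "pulsesrc ! queue ! audiorate ! audioconvert ! vorbisenc ! queue ! mux.audio_0 "
        # use-damage=false: needed on composited desktops such as GNOME 3
        "ximagesrc use-damage=false ! queue ! videorate ! videoconvert "
        # a low framerate keeps the VP8 encoder from falling behind
        f"! video/x-raw,framerate={fps}/1 "
        f"! vp8enc threads={encoder_threads} ! queue ! mux.video_0"
    )
    # -e forces EOS on shutdown so webmmux can finalize the file.
    return ["gst-launch-1.0", "-e", *shlex.split(pipeline)]


def record(**kwargs) -> int:
    """Run the pipeline until Ctrl-C; returns gst-launch's exit code."""
    proc = subprocess.Popen(screencast_args(**kwargs))
    try:
        return proc.wait()
    except KeyboardInterrupt:
        # Ctrl-C also reached gst-launch (same process group). With -e it
        # sends EOS, so keep waiting for it to finish writing the file.
        return proc.wait()
```

Calling `record(fps=5)` starts recording and Ctrl-C stops it. The wrapper deliberately waits instead of killing the child so the WebM file is closed properly.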

Configurability is the root of all evil

I’ve been using the Fish shell for a while now. Its auto-completion is so much better than Bash’s. You should try it.

I was looking at the Fish docs this morning and stumbled across this little gem.

Every configuration option in a program is a place where the program is too stupid to figure out for itself what the user really wants, and should be considered a failure of both the program and the programmer who implemented it.

From the Fish Design Documentation.

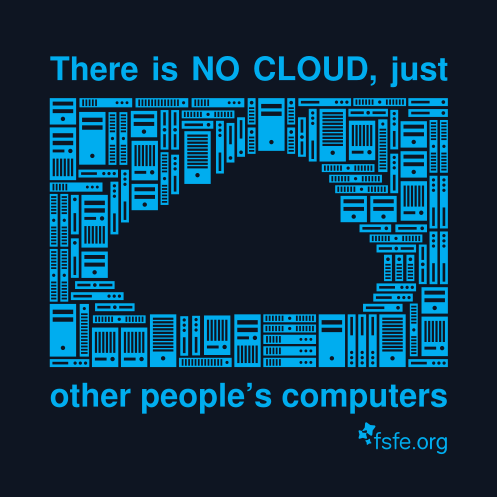

There is no cloud

Ragel

eBPF and tracing

Detecting Failure

Part 1: Internet Redundancy, Or Not

Part 2: Redundant Connections to a Single Host?

In the last post I discussed how devices like your laptop and mobile phone are computers with multiple Internet connections, not all that different from a network with multiple connections. The anecdote about Skype on a mobile phone reconnecting a call after you leave Wi-Fi range alludes to one key difference: a device directly connected to a network connection can easily detect a total failure of that connection. In the example, this allowed Skype to quickly try to reconnect using the phone’s cellular connection.

Think back to our initial problem: how can a normal business get redundant Internet connections? The simplest, and at best half, solution is a router with two WAN connections that NATs traffic out each port.

Now imagine you are using a laptop connected to a network with dual NATed WAN connections, and you are in the middle of a Skype call. The connection carrying the Skype call will use one of the two WAN ports and, since NAT is used, its source address will be the IP address associated with the chosen WAN port. As we discussed before, this ‘binds’ the connection to that WAN connection.
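To make the ‘binding’ concrete, here is a toy Python model of a dual-WAN NAT router. It is an illustration only: the round-robin choice of WAN port, the `DualWanNat` class and the documentation-range addresses are all assumptions, and real routers choose ports with their own policies.

```python
class DualWanNat:
    """Toy model: each new flow is NATed out one WAN port and stays there."""

    def __init__(self, wan_ips: dict[str, str]) -> None:
        self.wan_ips = dict(wan_ips)                 # WAN port -> public IP
        self.up = {port: True for port in self.wan_ips}
        self.bindings: dict[tuple, str] = {}         # flow 4-tuple -> WAN port
        self._rr = 0                                 # round-robin counter (assumed policy)

    def open(self, flow: tuple) -> str:
        """Bind a new flow to a live WAN port; return its public source IP."""
        live = [p for p in self.wan_ips if self.up[p]]
        if not live:
            raise ConnectionError("no WAN connection is up")
        port = live[self._rr % len(live)]
        self._rr += 1
        self.bindings[flow] = port
        return self.wan_ips[port]

    def forward(self, flow: tuple) -> bool:
        """An existing flow only works while its bound WAN port is up."""
        return self.up[self.bindings[flow]]
```

Opening the call flow binds it to the first WAN. If that port then fails, `forward` returns `False` for the call even though the second WAN is fine; only a brand-new flow, opened by the application, gets the second WAN’s address.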

In our previous example of a phone switching to its cellular connection when the Wi-Fi connection drops, Skype was able to quickly decide to try to open another connection. This was possible because when the Wi-Fi connection dropped, Skype got a notification that its connection(s) were terminated.

For a device behind the gateway, like our laptop, there is no such notification because no network interface on the local device failed. All Skype knows is that it has stopped receiving data – it has no idea why. This could be a transient error, or perhaps the whole Internet died. This forces applications to rely on keep-alive messages to determine when the network has failed. Once the application decides the connection has failed, it can try to open another one. In our dual NATed WAN network, this new connection will succeed because it will be NATed out the second WAN interface.
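Application-level failure detection of this kind can be sketched as a keep-alive monitor: the application notes every time it hears from the other end and declares the connection dead after a few intervals of silence. `KeepAliveMonitor`, `interval` and `misses` are hypothetical names, and this is one simple approach, not how Skype actually does it.

```python
import asyncio
import time


class KeepAliveMonitor:
    """Declare a connection dead after `misses` intervals with no data.

    Sketch only: the application must call heard() whenever any data or
    keep-alive reply arrives on the connection it is monitoring.
    """

    def __init__(self, interval: float, misses: int) -> None:
        self.interval = interval  # seconds between checks (and keep-alives)
        self.misses = misses      # silent intervals tolerated before failing
        self.last_heard = time.monotonic()

    def heard(self) -> None:
        self.last_heard = time.monotonic()

    @property
    def timeout(self) -> float:
        return self.interval * self.misses

    async def wait_for_failure(self) -> float:
        """Return the length of the silence once it reaches the timeout."""
        while True:
            await asyncio.sleep(self.interval)
            silent = time.monotonic() - self.last_heard
            if silent >= self.timeout:
                return silent
```

With this design, a failure is noticed after roughly `interval * misses` to `interval * (misses + 1)` seconds of silence. Lowering either knob detects a dead WAN link sooner, at the cost of more false alarms on a link that is merely congested; after `wait_for_failure` returns, the application would open a new connection.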

In the meantime, the user experienced an outage even though the network still had an active connection to the Internet. The duration of this outage depends on how aggressive the application’s timeouts are: short timeouts risk flapping between connections, while longer timeouts provide a poorer experience. Of course, this also assumes that the application includes this non-trivial functionality; most don’t.

Isn’t delivering packets the network’s job, not the application’s?